
VCAP6 Design Beta Exams are now available!

VMware has recently announced, via the Education and Certification Blog, that the Beta Exams for the v6 VCAPs are out or coming soon. Specific details can be found at https://blogs.vmware.com/education/2016/02/new-vcap6-beta-exams-now-available.html

Right now, you can sign up for the VCAP6-DCV Design or the VCAP6-CMA Design. I’ll probably be going only for the former. VCAP6-DTM Design for Desktop and Mobility won’t be out until later in Feb.

You’re going to need to be either a VCP6 or a VCP5+VCAP5 to be approved.

Exam cost is $100 USD. I doubt any discounts would apply (e.g. VMUG Advantage), but I’ll try once I’m approved. The first appointments will be Feb 15, 2016, and there’s no indication of how long they’ll run. Access is first come, first served, so if you’re interested, sign up now!

Fighting ESXi 6.0 Network Disconnects–might be KB2124669?

Recently I’ve been having some issues in my home lab where hosts seem to stop responding. I’ve been busy, so I haven’t found the time to verify whether the hosts crashed, locked up, or just had a networking issue. So, quite unfortunately, I’ve been rebooting them to bring them back and leaving it on my To Do list.

Until it started happening at work. We have a standalone, non-clustered box with internal disks that functions as a NAS. So it’s “very important” but “not critical”, or it would be HA, etc. The same symptoms occurred there, and of course there we HAD to troubleshoot it.

Tried the following:

* Disconnect the NICs and reconnect them

* Shut/no-shut the ports on the switch

* Access via IPMI/iDRAC: works. We could access the DCUI to verify the host was up, and could ping the VMs on the host, so those were working.

* Tried removing or adding other NICs via the DCUI to the vSwitch that has the management network. No go. It didn’t matter whether we used the two onboard NICs, the two add-in NICs, NICs split across two controllers, a single NIC, etc.

Understandably, we’re pretty sure we have a networking issue, but only on the one host at this time. The Dell R610 and R620 have no similar issues, but this is an in-house-built Supermicro we use for Dev. So the issue appears related to the host, and with only one such host, it’s tough to troubleshoot.

The NICs are not the same across hosts: this host has an onboard 82574L and a 4-port 82571EB, while my C6100 has 82576s (and I haven’t confirmed it is having the same issue). In any case, it doesn’t seem like an issue with a particular chipset or NIC model.

So we’ve started looking for others having similar issues and found:

https://communities.vmware.com/thread/517399

After upgrade of ESXi 5.5 to 6.0 server loses every few days the network connection

Reach out to VMware support on this issue to see if it is related to a known bug in ESXi 6. The event logged in my experience with this same situation is "netdev_watchdog:3678: NETDEV WATCHDOG". I did not see this in your logs but the failure scenario I have experienced is the same as you described.

Okay, not great, but sounds like the same sort of issue. Someone then suggested that the bug is known. Okay. Check the release notes and:

http://pubs.vmware.com/Release_Notes/en/vsphere/60/vsphere-esxi-60u1-release-notes.html

New Network connectivity issues after upgrade from ESXi 5.x to ESXi 6.0

After you upgrade from ESXi 5.x to ESXi 6.0, you might encounter the following issues

The ESXi 6.0 host might randomly lose network connectivity

The ESXi 6.0 host becomes non-responsive and unmanageable until reboot

After reboot, the issue is temporarily resolved for a period of time, but occurs again after a random interval

Transmit timeouts are often logged by the NETDEV WATCHDOG service in the ESXi host. You may see entries similar to the following in the /var/log/vmkernel.log file:

cpu0:33245)WARNING: LinNet: netdev_watchdog:3678: NETDEV WATCHDOG: vmnic0: transmit timed out

cpu0:33245)WARNING: at vmkdrivers/src_92/vmklinux_92/vmware/linux_net.c:3707/netdev_watchdog() (inside vmklinux)

The issue can impact multiple network adapter types across multiple hardware vendors. The exact logging that occurs during a transmit timeout may vary from card to card.

That sounds similar. We’re still digging through logs and also waiting for it to occur again to catch it in the act.
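While waiting to catch it in the act, a quick check is whether a host has already logged these transmit timeouts. The sketch below pulls the NIC names out of the NETDEV WATCHDOG lines and counts them. It assumes the message format quoted in the release notes (`NETDEV WATCHDOG: vmnicN: transmit timed out`) and the default /var/log/vmkernel.log path. The exact logging varies from card to card, so an empty result doesn’t prove you’re in the clear. The function name is my own.

```shell
#!/bin/sh
# Count NETDEV WATCHDOG transmit timeouts per vmnic in a vmkernel log.
# Assumes the message format from the ESXi 6.0U1 release notes, e.g.
#   ... NETDEV WATCHDOG: vmnic0: transmit timed out
# Drivers that log timeouts differently will not be matched.
find_tx_timeouts() {
    log="${1:-/var/log/vmkernel.log}"
    if [ ! -r "$log" ]; then
        echo "cannot read $log" >&2
        return 1
    fi
    # Print only the NIC name from matching lines, then count per NIC.
    sed -n 's/.*NETDEV WATCHDOG: \([^:]*\): transmit timed out.*/\1/p' "$log" \
        | sort | uniq -c
}
```

Run `find_tx_timeouts` in the ESXi shell to check the live log. You can also pass a copy of vmkernel.log (for example, one taken from a support bundle) as the first argument.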

The next hit we found was:

https://communities.vmware.com/message/2525461#2525461

If you’re seeing this in your vmkernel.log at the time of the disconnect it could be related to an issue that will one day be described at the below link (it is not live at this time). We see this after a random amount of time and nothing VMware technical support could do except reboot the host helped.

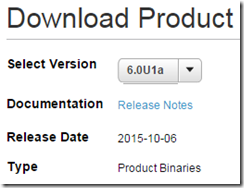

KB2124669 definitely covers the observed symptoms. Note the date:

- Updated: Oct 6, 2015

That’s yesterday; maybe we’re on the right track! Now also look at the RESOLUTION:

This issue is resolved in ESXi 6.0 Update 1a, available at VMware Downloads. For more information, see the VMware ESXi 6.0 Update 1a Release Notes.

There’s a 1*A* now?

There is, in fact, a 6.0U1a out. Who knew! Released _yesterday_.

Now, watch the top of your screen, because VMware DOES attempt to make sure you know there are some issues (as there always are; no new release is perfect):

http://kb.vmware.com/kb/2131738

And that list includes:

Also, if you haven’t bumped into it before, there are great links here for Upgrade Best Practices, Update Sequences, etc. DO give this a read.

Understandably, we won’t get this fix in TODAY. We do have a maintenance cycle coming up soon, where we’ll get vCenter Server 6.0U1 in and can go to ESXi v6.0U1a – and hopefully this will fix the network issue. If not, back to troubleshooting I guess. Fingers crossed.
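After the maintenance window, it’s worth confirming that each host actually landed on the new build. The sketch below compares the build number reported by `vmware -v` against one you supply. I haven’t hard-coded the 6.0U1a build number, so take it from the release notes. The function name is my own.

```shell
#!/bin/sh
# Succeed if the host's ESXi build is at least the given build number.
# Usage: build_at_least <required_build> [version_string]
# version_string defaults to the output of `vmware -v` on the host, which
# looks like "VMware ESXi 6.0.0 build-2809209". Nothing is hard-coded here.
# Returns 0 (at or above), 1 (below), or 2 (version string not understood).
build_at_least() {
    required="$1"
    version="${2:-$(vmware -v)}"
    current=$(echo "$version" | sed -n 's/.*build-\([0-9][0-9]*\).*/\1/p')
    if [ -z "$current" ]; then
        echo "could not find a build number in: $version" >&2
        return 2
    fi
    [ "$current" -ge "$required" ]
}
```

On a host, something like `build_at_least "$REQUIRED_BUILD" && echo patched || echo "still needs the update"` would do the job. REQUIRED_BUILD is a variable you set yourself from the release notes.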

I’ll post an update next week if we get the upgrade in and see a resolution to this.

HOWTO: Dell vSphere 6.0 Integration Bits for Servers

When I do a review of a vSphere site, I typically start by looking to see if best practices are being followed, then look to see if any of the 3rd party bits are installed. This post picks on Dell environments a little, but the same general overview holds true for HP, IBM/Lenovo, Cisco, or…. Everyone has their own 3rd party integration bits to be aware of. Perhaps this is the part where everyone likes Hyper Converged, because you don’t have to know about this stuff. But as the person administering it, you should at least be aware of it, if not an expert in it.
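For the “are the 3rd party bits installed?” check, the host’s VIB list is a quick place to look. The sketch below filters the output of `esxcli software vib list` down to one vendor. It assumes the default table layout, with two header lines followed by Name, Version and Vendor as the first three columns, and a vendor name without spaces in it. Verify both assumptions against your own hosts. The function name is my own.

```shell
#!/bin/sh
# Filter `esxcli software vib list` output (read from stdin) to one vendor.
# Assumes two header lines, then Name, Version, Vendor as columns 1-3,
# and a single-word vendor name. Matching is case-insensitive.
vibs_from_vendor() {
    awk -v vendor="$1" 'NR > 2 && tolower($3) == tolower(vendor) { print $1, $2 }'
}
```

Usage on a host would be `esxcli software vib list | vibs_from_vendor Dell`. An empty result on a Dell box suggests the iDRAC Service Module and OMSA VIBs haven’t been installed yet, assuming those VIBs report Dell as their vendor.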

I’m not going to go into details as to how to install or integrate these components. I just wanted to make a cheat sheet for myself, and maybe remind some folks that, regardless of your vendor, you should check for the extras. They’re part of why you’re not buying white boxes, so take advantage of them. Most of it is free!

The links:

I’ve picked on a Dell PowerEdge R630 server, but realistically any 13G box would have the same requirements. Even older 11/12G boxes such as an R610 or R620 would. So first we start with the overview page for the R630 – remember to change that OS selection to “VMware ESXi v6.0”

http://www.dell.com/support/home/us/en/04/product-support/product/poweredge-r630/drivers

Dell iDRAC Service Module (VIB) for ESXi 6.0, v2.2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=2XHPY

You’re going to want to be able to talk to and manage the iDRAC from inside of ESXi, so get the VIB that allows you to do so. This installs via VUM incredibly easily.

Dell OpenManage Server Administrator vSphere Installation Bundle (VIB) for ESXi 6.0, v8.2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=VV2P2

Next, you’ll want to be able to handle talking to OMSA on the ESXi box itself, to get health, management, inventory, and other features. Again, this installs with VUM.

OpenManage™ Integration for VMware vCenter, v3.0

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=8V0JG

This will let your vCenter present you with various tools to manage your Dell infrastructure right from within vCenter. Installs as an OVF and is a virtual appliance, so no server required.

VMware ESXi 6.0

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=CG9FP

Your customized ESXi installation ISO. Note the file name, VMware-VMvisor-Installer-6.0.0-2809209.x86_64-Dell_Customized-A02.iso. From the -2809209 and the -A02 plus a quick Google search, you can see that this is v6.0.0b (https://www.vmware.com/support/vsphere6/doc/vsphere-esxi-600b-release-notes.html) rather than v6.0U1.
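If you keep a folder of these ISOs, the build number and Dell revision can be pulled out of the file name with a little sed. This sketch assumes other Dell customized ISOs follow the same naming pattern as the one above, which I haven’t verified. You still need VMware’s release notes to map a build to a release such as 6.0.0b. The function name is my own.

```shell
#!/bin/sh
# Extract the ESXi build number and Dell revision from a Dell customized ISO name,
# e.g. VMware-VMvisor-Installer-6.0.0-2809209.x86_64-Dell_Customized-A02.iso
# Assumes the same naming pattern for other Dell ISOs.
parse_dell_iso() {
    name=$(basename "$1")
    build=$(echo "$name" | sed -n 's/^VMware-VMvisor-Installer-[0-9.]*-\([0-9][0-9]*\)\.x86_64.*/\1/p')
    rev=$(echo "$name" | sed -n 's/.*-\(A[0-9][0-9]*\)\.iso$/\1/p')
    echo "build=$build rev=$rev"
}
```

For the file above, `parse_dell_iso VMware-VMvisor-Installer-6.0.0-2809209.x86_64-Dell_Customized-A02.iso` prints `build=2809209 rev=A02`.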

Dell Systems Management Tools and Documentation DVD ISO, v.8.2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=4HHMH

You likely will not need this for a smaller installation, but it can help out if you need to standardize, by allowing you to configure and export/import things like BIOS/UEFI, Firmware, iDRAC, LCC, etc settings. Can’t hurt to have around.

There is no longer a need for an “SUU” (Systems Update Utility), as the Lifecycle Controller built into every iDRAC, even the Express, will allow you to do updates from that device. I recommend doing them via the network. It is significantly less hassle than going through the Dell Repository Builder, downloading your copies to USB/ISO/DVD media, and doing it that way.

Now, the above covers what you’ll require for vSphere. What is NOT immediately obvious are the tools you may want to use in Windows. Even though you now have management capability on the hosts and can see things in vCenter, you’re still missing the ability to talk to devices and manage them from Windows, which is where I spend all of my actual time. Things like monitoring, control, management, etc. are all done from within Windows. So let’s go ahead and change that OS to “Windows Server 2012 R2 SP1” and get some additional tools:

Dell Lifecycle Controller Integration 3.1 for Microsoft System Center Configuration Manager 2012, 2012 SP1 and 2012 R2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=CKHYR

If you are a SCCM shop, you may very much want to be able to control the LCC via SCCM to handle hardware updates.

Dell OpenManage Server Administrator Managed Node(windows – 64 bit) v.8.2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=6J8T3

Even though you’ve installed the OMSA VIBs on ESXi, there is no actual web server there. So you’ll need to install the OMSA Web Server tools somewhere (it could even be your workstation) and use that. You’ll then select “connect to remote node” and specify the target ESXi system and credentials.

Dell OpenManage Essentials 2.1.0

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=JW22C

If you’re managing many Dell systems and not just servers, you may want to go with OME if you do not have SCCM or similar. It’s a pretty good 3rd party SNMP/WMI monitoring solution as well. But will also allow you to handle remote updates of firmware, BIOS, settings, etc, on various systems – network, storage, client, thin client, etc.

Dell OpenManage DRAC Tools, includes Racadm (64bit),v8.2

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=9RMKR

RACADM is a tool I’ve used before, and I have some links on how to use it remotely. This tool can greatly help you standardize your BIOS/iDRAC settings via a script.
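The scripting side can start as simply as looping one remote command over a list of iDRACs. This sketch has several assumptions built in. racadm from the DRAC Tools package is on your PATH. Every iDRAC accepts the same credentials. The host list arrives one address per line on stdin. The password also ends up on the command line, where other local users can see it. Setting RACADM=echo prints the commands instead of running them. The function and variable names are my own.

```shell
#!/bin/sh
# Run one remote RACADM command against a list of iDRACs (one host per line on stdin).
# Assumptions: racadm is on PATH and all iDRACs share one set of credentials.
# Set RACADM=echo to print the commands instead of running them.
racadm_all() {
    user="$1"
    pass="$2"
    shift 2
    while read -r host; do
        [ -n "$host" ] || continue
        # </dev/null stops racadm from consuming the host list on stdin.
        ${RACADM:-racadm} -r "$host" -u "$user" -p "$pass" "$@" </dev/null
    done
}
```

For example, `printf '%s\n' 192.0.2.11 192.0.2.12 | racadm_all root 'changeme' getsysinfo` uses hypothetical addresses and credentials. To actually standardize settings, you’d pass `get`/`set` commands with the attribute names from Dell’s RACADM reference for your iDRAC generation. I haven’t listed any here.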

Dell Repository Manager, v2.1

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=2RXX2

The repository manager as mentioned is a tool you can use to download only the updates required for your systems. Think of it like WSUS (ish).

Dell License Manager

http://www.dell.com/support/home/us/en/04/Drivers/DriversDetails?driverId=68RMC

The iDRAC is the same on every system; it is only the licence that changes. To apply the Enterprise licence, you’ll need the License Manager.

Hopefully this will help someone keep their Dell environment up to date. Note that I have NOT called out any Dell Storage items such as MD3xxx, Equallogic PSxxxx, or Compellent SCxxxx products. If I tried to, the list would be significantly longer. Also worth noting is that some vendors’ _networking_ products have similar add-ins, so don’t forget to look for those as well.

HOWTO: Fix Veeam v8.0 NFC errors with vSphere v6.0U1 using SSLv3

Recently updated vSphere to v6.0U1 from v6.0? Using Veeam Backup & Replication v8.0.0.2030? Getting NFC storage issues like those below?

Specifically: ERR |SSL error, code: [336151568].error:14094410:SSL routines:SSL3_READ_BYTES:sslv3 alert handshake failure

You can find out more about this on the Veeam Forums at: http://forums.veeam.com/vmware-vsphere-f24/vsphere-6-0-u1-t30210.html

The high level explanation is that Veeam is using both TLS and SSLv3 components to connect to VMware – and VMware has disabled the SSL method in v6.0U1. There is a bug in how Veeam is auto-detecting SSL or TLS connectivity, causing this issue. Other VMware products are having similar issues talking to their own products, from what I understand.

Veeam has KB2063 on the issue here: http://www.veeam.com/kb2063. You have two options: call in and request a private out-of-band hotfix from Veeam, or make changes on the VMware side.

VMware KB 2121021 discusses how you can make these changes: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2121021

The high level gist is:

· Add "enableSSLv3: true" to /etc/sfcb/sfcb.cfg and then: /etc/init.d/sfcbd-watchdog restart

· Add "vmauthd.ssl.noSSLv3 = false" to /etc/vmware/config and then: /etc/init.d/rhttpproxy restart

I’ve whipped up a quick BASH script that seems to work in my testing. It will:

· check whether the desired option already exists and, if so, leave it alone

· if the option exists but has the opposite setting (true vs false, etc.), flip the setting

· if the option does not exist, add it

TEST IT BEFORE YOU RUN IT IN YOUR ENVIRONMENT; I’m not responsible if it does wonky things to you.

Applying the changes does NOT require Maintenance Mode on the hosts, or restarting any Veeam services. You can simply “retry” the job on the Veeam server, and “It Just Works”.

This will likely be resolved by end of September when Veeam releases the next update to Veeam B&R – or there may be a vSphere v6.0U1a released. Once the Veeam fix is released, it may be prudent to reverse or disable these changes on your hosts so you can use TLS vs SSL.

==== BEGIN VeeamNFCFix.sh =====

#!/bin/sh
#
# Applies the recommendations in VMware KB 2121021:
# http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2121021
# Each file is backed up with a .old suffix before any change is made.
#

#
# 1) Make sure "enableSSLv3: true" is set in /etc/sfcb/sfcb.cfg
#
cp /etc/sfcb/sfcb.cfg /etc/sfcb/sfcb.cfg.old
if grep -q -i "enableSSLv3: true" /etc/sfcb/sfcb.cfg; then
    echo "enableSSLv3: true found - no change needed"
elif grep -q -i "enableSSLv3: false" /etc/sfcb/sfcb.cfg; then
    echo "enableSSLv3: false found - changing it to true"
    sed -i 's/enableSSLv3: false/enableSSLv3: true/g' /etc/sfcb/sfcb.cfg
else
    echo "enableSSLv3 not found - adding enableSSLv3: true"
    echo "enableSSLv3: true" >> /etc/sfcb/sfcb.cfg
fi
/etc/init.d/sfcbd-watchdog restart

#
# 2) Make sure "vmauthd.ssl.noSSLv3 = false" is set in /etc/vmware/config
#
cp /etc/vmware/config /etc/vmware/config.old
if grep -q -i "vmauthd.ssl.noSSLv3 = false" /etc/vmware/config; then
    echo "vmauthd.ssl.noSSLv3 = false found - no change needed"
elif grep -q -i "vmauthd.ssl.noSSLv3 = true" /etc/vmware/config; then
    echo "vmauthd.ssl.noSSLv3 = true found - changing it to false"
    sed -i 's/vmauthd.ssl.noSSLv3 = true/vmauthd.ssl.noSSLv3 = false/g' /etc/vmware/config
else
    echo "vmauthd.ssl.noSSLv3 not found - adding vmauthd.ssl.noSSLv3 = false"
    echo "vmauthd.ssl.noSSLv3 = false" >> /etc/vmware/config
fi
/etc/init.d/rhttpproxy restart

==== END VeeamNFCFix.sh =====
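Once the Veeam fix is out and you want to go back to TLS, the two settings can be flipped back. This is a sketch, not a tested procedure. It assumes that setting the values back (enableSSLv3: false, vmauthd.ssl.noSSLv3 = true) is equivalent to the 6.0U1 defaults, which I haven’t confirmed against VMware’s KB, so compare the results with the .old copies the script saved. The file paths are optional arguments, so you can try it on copies first. It leaves the service restarts to you. The function name is my own.

```shell
#!/bin/sh
# Undo the VeeamNFCFix.sh settings so SSLv3 is disabled again.
# Assumption: flipping the values back is enough; deleting the lines may be cleaner.
# Backups are written with a .pre-revert suffix. Restart afterwards, as before:
#   /etc/init.d/sfcbd-watchdog restart
#   /etc/init.d/rhttpproxy restart
revert_sslv3() {
    sfcb_cfg="${1:-/etc/sfcb/sfcb.cfg}"
    vmw_cfg="${2:-/etc/vmware/config}"
    cp "$sfcb_cfg" "$sfcb_cfg.pre-revert" || return 1
    cp "$vmw_cfg" "$vmw_cfg.pre-revert" || return 1
    sed -i 's/enableSSLv3: true/enableSSLv3: false/' "$sfcb_cfg"
    sed -i 's/vmauthd.ssl.noSSLv3 = false/vmauthd.ssl.noSSLv3 = true/' "$vmw_cfg"
}
```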

C6100 IPMI Issues with vSphere 6

So I’m not 100% certain if the issues I’m having on my C6100 server are vSphere 6 related or not. But I have seen similar issues before in my lab, so it may be one of a few things.

After a recent upgrade, I noted that some of my VMs seemed “slow”, which is hard to quantify. Then this morning I wake up to having internet but no DNS, so I know my DC is down. The hosts are up, though. So I give them a hard boot, connect to the IPMI KVM, and watch the startup, only to see “loading IPMI_SI_SRV…” just sitting there.

In the past, this seemed to be related to a failing SATA disk, and the solution was to pop it out. That helped temporarily, until I replaced the disk outright. But these are new drives. Trying the same here did not work, though I only tried the spinning disks and not the SSDs. Rather than mess around, I thought I’d find a way to disable IPMI, at least to troubleshoot.

Turns out I wasn’t alone, though the issue isn’t specific to vSphere 6:

https://communities.vmware.com/message/2333989

https://xuri.me/2014/12/06/avoid-vmware-esxi-loading-module-ipmi_si_drv.html

That last one is the option I took:

- Press SHIFT+O during the Hypervisor startup

- Append “noipmiEnabled” to the boot args

Which got my hosts up and running.

I haven’t done any deeper troubleshooting, nor have I permanently disabled IPMI using the options the article describes:

Manually turn off or remove the module by turning the option “VMkernel.Boot.ipmiEnabled” off in vSphere or using the commands below:

# Do a dry run first:

esxcli software vib remove --dry-run --vibname ipmi-ipmi-si-drv

# Remove the module:

esxcli software vib remove --vibname ipmi-ipmi-si-drv
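To make the boot-time workaround stick without removing the VIB, I believe the same ipmiEnabled option can be set from the ESXi shell with `esxcli system settings kernel`. Treat this as an untested sketch. By default it is a dry run that only prints the commands. DRY_RUN and the helper names are my own, and the change only takes effect on the next reboot.

```shell
#!/bin/sh
# Persistently disable the IPMI driver instead of typing "noipmiEnabled"
# at every boot. Untested sketch: DRY_RUN defaults to 1, which only prints
# the commands; run with DRY_RUN=0 in the ESXi shell to execute them.
maybe_run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

disable_ipmi() {
    # Intended to match turning VMkernel.Boot.ipmiEnabled off in the client.
    maybe_run esxcli system settings kernel set -s ipmiEnabled -v FALSE
    # Show the configured value; the change applies from the next reboot.
    maybe_run esxcli system settings kernel list -o ipmiEnabled
}
```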

We’ll see what comes when I get more time…

Modifying the Dell C6100 for 10GbE Mezz Cards

In a previous post, Got 10GbE working in the lab – first good results, I talked about getting 10GbE working with my Dell C6100 series. Recently, a commenter asked me if I had any pictures of the modifications I had to make to the rear panel to make these 10GbE cards work. I recently acquired another C6100 (yes, I have a problem…) that needs the mods, so it seems only prudent to share the steps I took in case they help someone else.

First a little discussion about what you need:

- Dell C6100 whose rear panel plate is not removable

- Dell X53DF/TCK99 2 Port 10GbE Intel 82599 SFP+ Adapter

- Dell HH4P1 PCI-E Bridge Card

You may find the Mezz card under either part number; it seems that the X53DF replaced the TCK99. Perhaps one is the P/N and one is the FRU or some such. But you NEED that little PCI-E bridge card. It is usually included, but pay special attention to the listing to make sure it is. What you DON’T really need is the mesh back plate on the card; you can get it bare.

Shown above are the 2pt 10GbE SFP+ card in question, and also the 2pt 40GbE Infiniband card. Above them both is the small PCI-E bridge card.

Remove the two screws that hold the backing plate on the card. You won’t be needing the plate, so you can set it aside. The screws attach through the card and into the bracket, so once they’re removed, reinsert them into the bracket so you don’t lose them.

Here we can see the back panel of the C6100 sled. Ready to go for cutting.

You can place the factory rear plate over the back plate. Here you can see where you need to line it up and mark the cuts you’ll be doing. Note that of course the bracket will sit higher up on the unit, so you’ll have to adjust for your horizontal lines.

If we look to the left, we can see the source of the problem that forces us to do this work. The back panel here is not removable, and it wraps around the left corner of the unit. In systems with the removable plate, this simply unscrews, and the panel attached to the card slots in. On the right-hand side you can see the two screws that would attach the panel and card in that case.

Here’s largely what we get once we complete the cuts. Perhaps you’re better with a Dremel than I am. Note that the vertical cuts can be tough depending on the size of the cutting disk you have, as they may have interference from the bar to remove the sled.

You can now attach the PCI-E bridge card to the Mezz card and slot it in. I found it easiest to come in at about a 20-degree angle, slot the 2 ports into the cut-outs, then drop the PCI-E bridge into the slot. When it’s all said and done, you’ll find it pretty secure and good to go.

That’s really about it. There’s not a whole lot to it, and if you have it all in hand, you’d figure it out pretty quickly. This is largely to show where my marked lines ended up compared to the actual cuts, and where adjustments could be made to make the cuts tighter if you wanted. Also, if you’re planning to order but are not sure whether it works or is even possible, this should help quite a bit.

Some potential vendors I’ve had luck with:

http://www.ebay.com/itm/DELL-X53DF-10GbE-DUAL-PORT-MEZZANINE-CARD-TCK99-POWEREDGE-C6100-C6105-C6220-/181751541002? – accepted $60 USD offer.

http://www.ebay.com/itm/DELL-X53DF-DUAL-PORT-10GE-MEZZANINE-TCK99-C6105-C6220-/181751288032?pt=LH_DefaultDomain_0&hash=item2a513890e0 – currently lists for $54 USD, I’m sure you could get them for $50 without too much negotiating.

EVALExperience now includes vSphere 6!

Both local VMUG members and members of the forums I frequent have been asking me when vSphere 6, vCenter 6, and ESXi 6 would be available as part of EVALExperience. Understandably, people are anxious to get their learning, labbing, and testing on.

I’m happy to announce that it looks like it’s up. If you head over to the VMUG page found at http://www.vmug.com/p/cm/ld/fid=8792 you’ll note that they show:

NEW! vSphere 6 and Virtual SAN 6 Now Available!

Of course, if you’ve signed up with VMUG, you should be getting the e-mail I just received as well. I’m not certain if it would go to all VMUG Members, only those that are already EVALExperience subscribers, or what.

What is now included, as per the e-mail blast is:

NOW AVAILABLE! VMware recently announced the general availability of VMware vSphere 6, VMware Integrated OpenStack and VMware Virtual SAN 6 – the industry’s first unified platform for the hybrid cloud! EVALExperience will be releasing the new products and VMUG Advantage subscribers will be able to download the latest versions of:

- vCenter Server Standard for vSphere 6

- vSphere with Operations Management Enterprise Plus

- vCloud Suite Standard

- Virtual SAN 6

- *New* Virtual SAN 6 All Flash Add-On

It is worth noting that the product download has been updated and upgraded. They do call out that the old products and keys will no longer be available. I can understand why, as part of this is to help members stay current. But it would be nice if you could use the N-1 version for a year of transition, etc. Not everyone can cut over immediately, and some people use their home labs to mirror the production environment at work so they can come home and try something they couldn’t at the office.

Some questions I’ve been asked, and the answers I’m aware of:

- How many sockets are included? The package includes 6 sockets for 3 hosts.

- Are the keys 365 days or dated expiry? I understand they’re dated expiry, so if you install a new lab 2 weeks before the end of your subscription, you’ll see 14 days left, not 364.

- What about VSAN? There had previously been a glitch that gave only one host’s worth of licences, which clearly does not work. This has been corrected.

Just a friendly reminder, as a VMUG leader: look into the VMUG Advantage membership. As always, VMUG membership itself is free, so come on down and attend a local meeting (the next Edmonton VMUG is June 16, and you can sign up here: http://www.vmug.com/p/cm/ld/fid=10777).

In addition, your VMUG Advantage subscriber benefits include:

- FREE Access to VMworld 2015 Content

- 20% discount on VMware Certification Exams & Training Courses (If you have a $3500 course you need/want, plus a $225 exam, for $3725 total, spending $200 or so on a VMUG Advantage to make your costs $2800+$180=$2980 is a great way to get $745 off. This is the sell you should be giving your employer.)

- $100 discount on VMworld 2015 registration (This is the only “stackable” discount for VMworld. Pre-registration/early-bird ends on June 8th, I believe.)

- 35% discount on VMware’s Lab Connect

- 50% discount on Fusion 7 Professional

- 50% discount on VMware Player 7 Pro

- 50% discount on VMware Workstation 11
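If you want to sanity-check the 20% math before pitching it to your boss, a few lines of shell will do. This is a sketch for whole-dollar prices only, since shell arithmetic is integer and rounds down. The $3500 course and $225 exam are the example figures from the list, and the function name is my own.

```shell
#!/bin/sh
# Total cost of several items after a flat percentage discount.
# Usage: discounted_total <percent_off> <price> [price...]
# Whole-dollar prices only; integer division rounds the result down.
discounted_total() {
    pct="$1"
    shift
    sum=0
    for price in "$@"; do
        sum=$((sum + price))
    done
    echo $((sum * (100 - pct) / 100))
}
```

`discounted_total 20 3500 225` prints 2980, which is $745 off $3725. Subtract the roughly $200 membership and you’re still about $545 ahead.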

Happy labbing, and if you’re local, hope to see you on June 16!

Registration Now Open: Edmonton VMUG Meeting June 16

Hey all my local Edmonton VMUG people! Registration is now open for our next VMUG, on Tuesday June 16.


Sponsors will be:

- Scalar with Nutanix, and I’m sure there will be many questions about Nutanix Community Edition!

- Zerto

We’ll also be dragging someone kicking and screaming (sounds like me) to do some talking about some Tales from the Trenches again and things we’ve run into and seen. Maybe you’ve run into them, maybe you haven’t. Maybe you have some to share. If you have some notes that you’re not willing to present, do reach out to me, I’m happy to present them to the crowd on your behalf.

Hope to see you there!

IBM RackSwitch–40GbE comes to the lab!

Last year, I had a post about 10GbE coming to my home lab (https://vnetwise.wordpress.com/2014/09/20/ibm-rackswitch10gbe-comes-to-the-lab/). This year, 40GbE comes!

This definitely falls into the traditional “too good to pass up” category. A company I’m doing work for picked up a couple of these, and there was enough of a supply that I was able to get my hands on a pair for a reasonable price. Reasonable, at least, after liquidating the G8124s from last year. (Drop me a line, they’re available for sale!)

Some quick high level on these switches, summarized from the IBM/Lenovo RedBooks (http://www.redbooks.ibm.com/abstracts/tips1272.html?open):

- 1U Fully Layer 2 and Layer 3 capable

- 4x 40GbE QSFP+ and 48x 10GbE SFP+

- 2x power supply, fully redundant

- 4x fan modules, also hot swappable.

- Mini-USB to serial console cable (dear god, how much I hate this non-standard part)

- Supports 1GbE Copper Transceiver – no issues with Cisco GLC-T= units so far

- Supports Cisco Copper TwinAx DAC cabling at 10GbE

- Supports 40GbE QSFP+ cables from 10GTek

- Supports virtual stacking, allowing for a single management unit

Everything else generally falls into line with the G8124. Where those are listed as “Access” switches, these are listed as “Aggregation” switches. Truly, I’ll probably NEVER have any need for this many 10GbE ports in my home lab, but I’ll also never run out. Equally, I now have switches that match production in one of my largest environments, so I can get good and familiar with them.

I’m still on the fence about the value of stacking. Since these will largely be used for iSCSI or NFS based storage, stacking may not even be required. In fact, there’s an argument to be made for keeping them completely segregated, other than port-channels between them, so that a bad stack command can’t take out both. Also, the Implementing IBM System Networking 10Gb Ethernet Switches guide lists the following limitations:

When in stacking mode, the following stand-alone features are not supported:

Active Multi-Path Protocol (AMP)

BCM rate control

Border Gateway Protocol (BGP)

Converged Enhanced Ethernet (CEE)

Fibre Channel over Ethernet (FCoE)

IGMP Relay and IGMPv3

IPv6

Link Layer Discovery Protocol (LLDP)

Loopback Interfaces

MAC address notification

MSTP

OSPF and OSPFv3

Port flood blocking

Protocol-based VLANs

RIP

Router IDs

Route maps

sFlow port monitoring

Static MAC address addition

Static multicast

Uni-Directional Link Detection (UDLD)

Virtual NICs

Virtual Router Redundancy Protocol (VRRP)

That sure seems like a lot of limitations. At a glance, I’m not sure anything there is end of the world, but it sure is a lot to give up.

At this point, I’m actually considering filling a number of ports with GLC-Ts and using them for 1GbE. A ‘waste’, perhaps, but if it means I can recycle my 1GbE switches, that’s an additional savings. If anyone has a box of them they’ve been meaning to get rid of, I’d be happy to work something out.

Some questions that will likely get asked, that I’ll tackle in advance:

- Come on, seriously: they’re data center 10/40GbE switches. YES, they’re loud. They’re not, however, unliveable. They do quiet down a bit after warm-up; during POST they run everything at 100%. But make no mistake, you’re not going to put one of these under the OfficeJet in your office, hook up your NAS to it, and not want to shoot yourself.

- Power is actually not that bad. These are pretty green, and they drop power to unlit ports. I haven’t hooked up a Kill-a-Watt to them yet, but will tomorrow. Based on the amp display on the PDUs they’re on right now, they’re on par with the G8124s.

- Yes, there are a couple more available.

To give you a ballpark, if you check eBay for a Dell PowerConnect 8024F and think that’s doable – then you’re probably going to be interested. You’d lose the 4x10GBaseT combo ports, but you’d gain 24x10GbE and 4x 40GbE.

- I’m not sure yet whether there are any 40GbE-compatible HBAs; I just haven’t looked into it. I’m guessing the Mellanox ConnectX-3 might do it. Really though, even at 10GbE, you’re not saturating that without a ton of disk IO.

More to come as I build out various configurations for these and come up with what seems to be the best option for a couple of C6100 hosts.

Wish me luck!

vSphere 6 CBT Bug–and patch now available.

Recently a CBT bug was identified in vSphere (http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2090639) involving VMDK disks extended beyond a 128GB boundary. I originally found out about it via a Veeam weekly newsletter, and a workaround was available in Veeam Backup & Replication v8.0 Update 2.

It seems there is also a bug specific to vSphere 6 (http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2114076). This bug doesn’t require extending the VMDK; it relates only to CBT. The workaround here seemed to be to disable CBT, which of course affected backup windows in a big way.
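If you did fall back to disabling CBT, or want to know which VMs are exposed before patching, it helps to know which VMs have CBT turned on. The read-only sketch below lists the .vmx files that do. It assumes the VM-level key is written as `ctkEnabled = "TRUE"` and does not check per-disk keys such as `scsi0:0.ctkEnabled`. The function name is my own.

```shell
#!/bin/sh
# List the .vmx files that have Changed Block Tracking enabled at the VM level.
# Assumes the key is written as ctkEnabled = "TRUE" (matched case-insensitively);
# per-disk keys such as scsi0:0.ctkEnabled are not checked. Reads files only.
list_cbt_vms() {
    for vmx in "$@"; do
        [ -r "$vmx" ] || continue
        if grep -qi '^ctkEnabled *= *"true"' "$vmx"; then
            echo "$vmx"
        fi
    done
}
```

From the ESXi shell, something like `list_cbt_vms /vmfs/volumes/*/*/*.vmx` should cover the usual one-folder-per-VM layout, though that glob is an assumption about how your datastores are organized.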

This bug is now fixed by the patch in http://kb.vmware.com/kb/2116125. If you’re an early adopter of vSphere 6, download, test, and verify it in your environments.

You should be able to find the patch in VUM, so apply it soon.